How To Find Percentage Error In Physics

Measurements and Error Analysis

"Information technology is better to be roughly correct than precisely wrong." — Alan Greenspan

The Uncertainty of Measurements

Some numerical statements are exact: Mary has 3 brothers, and 2 + 2 = 4. However, all measurements have some degree of uncertainty that may come from a variety of sources. The process of evaluating the uncertainty associated with a measurement result is often called uncertainty analysis or error analysis. The complete statement of a measured value should include an estimate of the level of confidence associated with the value. Properly reporting an experimental result along with its uncertainty allows other people to make judgments about the quality of the experiment, and it facilitates meaningful comparisons with other similar values or a theoretical prediction. Without an uncertainty estimate, it is impossible to answer the basic scientific question: "Does my result agree with a theoretical prediction or results from other experiments?" This question is fundamental for deciding if a scientific hypothesis is confirmed or refuted.

When we make a measurement, we generally assume that some exact or true value exists based on how we define what is being measured. While we may never know this true value exactly, we attempt to find this ideal quantity to the best of our ability with the time and resources available. As we make measurements by different methods, or even when making multiple measurements using the same method, we may obtain slightly different results. So how do we report our findings for our best estimate of this elusive true value? The most common way to show the range of values that we believe includes the true value is:

( 1 )

measurement = (best estimate ± uncertainty) units

Let's take an example. Suppose you want to find the mass of a gold ring that you would like to sell to a friend. You do not want to jeopardize your friendship, so you want to get an accurate mass of the ring in order to charge a fair market price. You estimate the mass to be between 10 and 20 grams from how heavy it feels in your hand, but this is not a very precise estimate. After some searching, you find an electronic balance that gives a mass reading of 17.43 grams. While this measurement is much more precise than the original estimate, how do you know that it is accurate, and how confident are you that this measurement represents the true value of the ring's mass? Since the digital display of the balance is limited to 2 decimal places, you could report the mass as m = 17.43 ± 0.01 g. Repeating the measurement several times might instead give an average in the range 17.44 ± 0.02 g.

Accuracy is the closeness of agreement between a measured value and a true or accepted value. Measurement error is the amount of inaccuracy.

Precision is a measure of how well a result can be determined (without reference to a theoretical or true value). It is the degree of consistency and agreement among independent measurements of the same quantity; also the reliability or reproducibility of the result.

The uncertainty estimate associated with a measurement should account for both the accuracy and precision of the measurement.

( 2 )

$$\text{Relative uncertainty} = \frac{\text{uncertainty}}{\text{measured quantity}}$$

Example: m = 75.5 ± 0.5 g has a relative uncertainty of 0.5 g / 75.5 g = 0.0066 ≈ 0.7%.

( 3 )

$$\text{Relative error} = \frac{\text{measured value} - \text{expected value}}{\text{expected value}}$$

If the expected value for m is 80.0 g, then the relative error is: (75.5 − 80.0) / 80.0 = −0.056 = −5.6%. Note: The minus sign indicates that the measured value is less than the expected value.
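The two ratios above are easy to compute directly. The following is a minimal sketch using the mass example from the text; the function names are illustrative choices, not part of the original manual.

```python
def relative_uncertainty(uncertainty, measured):
    """Uncertainty expressed as a fraction of the measured value (Equation 2)."""
    return uncertainty / measured


def relative_error(measured, expected):
    """Signed fractional difference from the expected value (Equation 3)."""
    return (measured - expected) / expected


m, dm = 75.5, 0.5      # measured mass and its uncertainty, in grams
expected = 80.0        # expected mass, in grams

print(f"relative uncertainty = {relative_uncertainty(dm, m):.1%}")   # 0.7%
print(f"relative error       = {relative_error(m, expected):.1%}")   # -5.6%
```

Multiplying the relative error by 100 gives the percentage error; the sign is kept so that a low reading stays distinguishable from a high one.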

Types of Errors

Measurement errors may be classified as either random or systematic, depending on how the measurement was obtained (an instrument could cause a random error in one situation and a systematic error in another).

Random errors are statistical fluctuations (in either direction) in the measured data due to the precision limitations of the measurement device. Random errors can be evaluated through statistical analysis and can be reduced by averaging over a large number of observations (see standard error).

Systematic errors are reproducible inaccuracies that are consistently in the same direction. These errors are difficult to detect and cannot be analyzed statistically. If a systematic error is identified when calibrating against a standard, applying a correction or correction factor to compensate for the effect can reduce the bias. Unlike random errors, systematic errors cannot be detected or reduced by increasing the number of observations.

When making careful measurements, our goal is to reduce as many sources of error as possible and to keep track of those errors that we cannot eliminate. It is useful to know the types of errors that may occur, so that we may recognize them when they arise. Common sources of error in physics laboratory experiments:

Incomplete definition (may be systematic or random): One reason that it is impossible to make exact measurements is that the measurement is not always clearly defined. For example, if two different people measure the length of the same string, they would probably get different results because each person may stretch the string with a different tension. The best way to minimize definition errors is to carefully consider and specify the conditions that could affect the measurement.

Failure to account for a factor (usually systematic): The most challenging part of designing an experiment is trying to control or account for all possible factors except the one independent variable that is being analyzed. For instance, you may inadvertently ignore air resistance when measuring free-fall acceleration, or you may fail to account for the effect of the Earth's magnetic field when measuring the field near a small magnet. The best way to account for these sources of error is to brainstorm with your peers about all the factors that could possibly affect your result. This brainstorm should be done before beginning the experiment in order to plan and account for the confounding factors before taking data. Sometimes a correction can be applied to a result after taking data to account for an error that was not detected earlier.

Environmental factors (systematic or random): Be aware of errors introduced by your immediate working environment. You may need to take account for or protect your experiment from vibrations, drafts, changes in temperature, and electronic noise or other effects from nearby apparatus.

Instrument resolution (random): All instruments have finite precision that limits the ability to resolve small measurement differences. For instance, a meter stick cannot be used to distinguish distances to a precision much better than about half of its smallest scale division (0.5 mm in this case). One of the best ways to obtain more precise measurements is to use a null difference method instead of measuring a quantity directly. Null or balance methods involve using instrumentation to measure the difference between two similar quantities, one of which is known very accurately and is adjustable. The adjustable reference quantity is varied until the difference is reduced to zero. The two quantities are then balanced and the magnitude of the unknown quantity can be found by comparison with a measurement standard. With this method, problems of source instability are eliminated, and the measuring instrument can be very sensitive and does not even need a scale.

Calibration (systematic): Whenever possible, the calibration of an instrument should be checked before taking data. If a calibration standard is not available, the accuracy of the instrument should be checked by comparing with another instrument that is at least as precise, or by consulting the technical data provided by the manufacturer. Calibration errors are usually linear (measured as a fraction of the full scale reading), so that larger values result in greater absolute errors.

Zero offset (systematic): When making a measurement with a micrometer caliper, electronic balance, or electrical meter, always check the zero reading first. Re-zero the instrument if possible, or at least measure and record the zero offset so that readings can be corrected later. It is also a good idea to check the zero reading throughout the experiment. Failure to zero a device will result in a constant error that is more significant for smaller measured values than for larger ones.

Physical variations (random): It is always wise to obtain multiple measurements over the widest range possible. Doing so often reveals variations that might otherwise go undetected. These variations may call for closer examination, or they may be combined to find an average value.

Parallax (systematic or random): This error can occur whenever there is some distance between the measuring scale and the indicator used to obtain a measurement. If the observer's eye is not squarely aligned with the pointer and scale, the reading may be too high or low (some analog meters have mirrors to help with this alignment).

Instrument drift (systematic): Most electronic instruments have readings that drift over time. The amount of drift is generally not a concern, but occasionally this source of error can be significant.

Lag time and hysteresis (systematic): Some measuring devices require time to reach equilibrium, and taking a measurement before the instrument is stable will result in a measurement that is too high or low. A common example is taking temperature readings with a thermometer that has not reached thermal equilibrium with its environment. A similar effect is hysteresis, where the instrument readings lag behind and appear to have a "memory" effect as data are taken sequentially moving up or down through a range of values. Hysteresis is most commonly associated with materials that become magnetized when a changing magnetic field is applied.

Personal errors come from carelessness, poor technique, or bias on the part of the experimenter. The experimenter may measure incorrectly, or may use poor technique in taking a measurement, or may introduce a bias into measurements by expecting (and inadvertently forcing) the results to agree with the expected outcome.

Gross personal errors, sometimes called mistakes or blunders, should be avoided and corrected if discovered. As a rule, personal errors are excluded from the error analysis discussion because it is generally assumed that the experimental result was obtained by following correct procedures. The term human error should also be avoided in error analysis discussions because it is too general to be useful.

Estimating Experimental Uncertainty for a Single Measurement

Any measurement you make will have some uncertainty associated with it, no matter the precision of your measuring tool. So how do you determine and report this uncertainty?

The uncertainty of a single measurement is limited by the precision and accuracy of the measuring instrument, along with any other factors that might affect the ability of the experimenter to make the measurement.

For example, if you are trying to use a meter stick to measure the diameter of a tennis ball, the uncertainty might be ± 5 mm, or perhaps ± 2 mm with a more precise instrument.

( 4 )

Measurement = (measured value ± standard uncertainty) unit of measurement

where the ± standard uncertainty indicates approximately a 68% confidence interval (see sections on Standard Deviation and Reporting Uncertainties).

Example: Diameter of tennis ball = 6.7 ± 0.2 cm.

Estimating Uncertainty in Repeated Measurements

Suppose you time the period of oscillation of a pendulum using a digital instrument (that you assume is measuring accurately) and find: T = 0.44 seconds. This single measurement of the period suggests a precision of ±0.005 s, but this instrument precision may not give a complete sense of the uncertainty. If you repeat the measurement several times and examine the variation among the measured values, you can get a better idea of the uncertainty in the period. For example, here are the results of 5 measurements, in seconds: 0.46, 0.44, 0.45, 0.44, 0.41.

( 5 )

$$\text{Average (mean)} = \frac{x_1 + x_2 + \cdots + x_N}{N}$$

For this situation, the best estimate of the period is the average, or mean.

Whenever possible, repeat a measurement several times and average the results. This average is generally the best estimate of the "true" value (unless the data set is skewed by one or more outliers, which should be examined to determine if they are bad data points that should be omitted from the average or valid measurements that require further investigation). Generally, the more repetitions you make of a measurement, the better this estimate will be, but be careful to avoid wasting time taking more measurements than is necessary for the precision required.
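As a quick check of the averaging step, here is a short sketch that applies the mean formula to the five pendulum periods listed above. It uses only the Python standard library; the variable names are illustrative.

```python
from statistics import mean

# Five timed periods of the pendulum, in seconds (values from the text).
periods = [0.46, 0.44, 0.45, 0.44, 0.41]

best_estimate = mean(periods)
print(f"T = {best_estimate:.2f} s")   # T = 0.44 s
```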

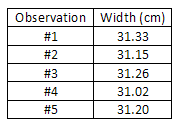

Consider, as another example, the measurement of the width of a piece of paper using a meter stick. Being careful to keep the meter stick parallel to the edge of the paper (to avoid a systematic error which would cause the measured value to be consistently higher than the correct value), the width of the paper is measured at a number of points on the sheet, and the values obtained are entered in a data table. Note that the last digit is only a rough estimate, since it is difficult to read a meter stick to the nearest tenth of a millimeter (0.01 cm).

( 6 )

$$\text{Average} = \frac{\text{sum of observed widths}}{\text{no. of observations}} = 31.19\ \text{cm}$$

This average is the best available estimate of the width of the piece of paper, but it is certainly not exact. We would have to average an infinite number of measurements to approach the true mean value, and even then, we are not guaranteed that the mean value is accurate because there is still some systematic error from the measuring tool, which can never be calibrated perfectly. So how do we express the uncertainty in our average value? One way to express the variation among the measurements is to use the average deviation. This statistic tells us on average (with 50% confidence) how much the individual measurements vary from the mean.

( 7 )

$$\bar{d} = \frac{|x_1 - \bar{x}| + |x_2 - \bar{x}| + \cdots + |x_N - \bar{x}|}{N}$$

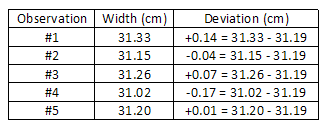

However, the standard deviation is the most common way to characterize the spread of a data set. The standard deviation is always slightly greater than the average deviation, and is used because of its association with the normal distribution that is frequently encountered in statistical analyses.

Standard Deviation

To calculate the standard deviation for a sample of N measurements:

1. Sum all the measurements and divide by N to get the average, or mean.
2. Subtract this average from each of the N measurements to obtain N "deviations".
3. Square each of these N deviations and add them all up.
4. Divide this result by (N − 1) and take the square root.

We can write out the formula for the standard deviation as follows. Let the N measurements be called $x_1, x_2, \ldots, x_N$. Let the average of the N values be called $\bar{x}$. Then each deviation is given by $\delta x_i = x_i - \bar{x}$, for $i = 1, 2, \ldots, N$. The standard deviation is:

( 8 )

$$s = \sqrt{\frac{\delta x_1^2 + \delta x_2^2 + \cdots + \delta x_N^2}{N - 1}}$$

In our previous example, the average width $\bar{x}$ is 31.19 cm. The average deviation is:

$$\bar{d} = 0.086\ \text{cm}.$$

The standard deviation is:

$$s = \sqrt{\frac{(0.14)^2 + (0.04)^2 + (0.07)^2 + (0.17)^2 + (0.01)^2}{5 - 1}} = 0.12\ \text{cm}.$$

For normally distributed data, about 68% of measurements are expected to fall within $\bar{x} \pm s$ and about 95% within $\bar{x} \pm 2s$.

Figure 1
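The four steps for the standard deviation can be sketched in a few lines. The raw paper widths live in a data table that is not reproduced in the text, so this example starts from the five absolute deviations quoted in the worked calculation; the variable names are illustrative.

```python
import math

# Absolute deviations |x_i - mean| in cm, taken from the worked example.
deviations = [0.14, 0.04, 0.07, 0.17, 0.01]
n = len(deviations)

# Average deviation: mean of the absolute deviations (Equation 7).
average_deviation = sum(deviations) / n

# Sample standard deviation: sum of squared deviations over (N - 1), then the square root.
std_dev = math.sqrt(sum(d ** 2 for d in deviations) / (n - 1))

print(f"average deviation  = {average_deviation:.3f} cm")   # 0.086 cm
print(f"standard deviation = {std_dev:.2f} cm")             # 0.12 cm
```

When the raw measurements are available, `statistics.stdev` from the standard library performs the same N − 1 calculation in one call.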

Standard Deviation of the Mean (Standard Error)

When we report the average value of N measurements, the uncertainty we should associate with this average value is the standard deviation of the mean, often called the standard error (SE).

( 9 )

$$\sigma_{\bar{x}} = \frac{s}{\sqrt{N}}$$

The standard error is smaller than the standard deviation by a factor of $1/\sqrt{N}$. In the previous example, dividing the standard deviation of 0.12 cm by $\sqrt{5}$ gives a standard error of 0.05 cm, so the result is reported as: Average paper width = 31.19 ± 0.05 cm.
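The standard error calculation for the paper-width example can be reproduced as follows. This is a small sketch; `standard_error` is an illustrative helper name, and the inputs are the rounded values quoted in the text.

```python
import math


def standard_error(std_dev, n):
    """Standard deviation of the mean: s / sqrt(N)."""
    return std_dev / math.sqrt(n)


s, n = 0.12, 5                      # standard deviation (cm) and number of measurements
se = standard_error(s, n)
print(f"standard error = {se:.2f} cm")          # 0.05 cm

# Quadrupling the number of measurements only halves the standard error.
print(f"{standard_error(s, 4 * n) / se:.2f}")   # 0.50
```

The second print shows why extra repetitions give diminishing returns: the uncertainty of the mean shrinks only as the square root of N.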

Anomalous Data

The first step you should take in analyzing data (and even while taking data) is to examine the data set as a whole to look for patterns and outliers. Anomalous data points that lie outside the general trend of the data may suggest an interesting phenomenon that could lead to a new discovery, or they may simply be the result of a mistake or random fluctuations. In any case, an outlier requires closer examination to determine the cause of the unexpected result. Extreme data should never be "thrown out" without clear justification and explanation, because you may be discarding the most significant part of the investigation! However, if you can clearly justify omitting an inconsistent data point, then you should exclude the outlier from your analysis so that the average value is not skewed from the "true" mean.

Fractional Uncertainty Revisited

When a reported value is determined by taking the average of a set of independent readings, the fractional uncertainty is given by the ratio of the uncertainty divided by the average value. For this example,

( 10 )

$$\text{Fractional uncertainty} = \frac{\text{uncertainty}}{\text{average}} = \frac{0.05\ \text{cm}}{31.19\ \text{cm}} = 0.0016 \approx 0.2\%$$

Note that the fractional uncertainty is dimensionless but is often reported as a percentage or in parts per million (ppm) to emphasize the fractional nature of the value. A scientist might also make the statement that this measurement "is good to about 1 part in 500" or "precise to about 0.2%". The fractional uncertainty is also important because it is used in propagating uncertainty in calculations using the result of a measurement, as discussed in the next section.

Propagation of Uncertainty

Suppose we want to determine a quantity f, which depends on x and perhaps several other variables y, z, etc. We want to know the error in f if we measure x, y, ... with errors $\sigma_x$, $\sigma_y$, ... Examples:

( 11 )

f = xy (Area of a rectangle)

( 12 )

f = p cos θ (x-component of momentum)

( 13 )

f = x / t (velocity)

For a single-variable function f(x), the deviation in f can be related to the deviation in x using calculus:

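( 14 )

$$\delta f = \left(\frac{df}{dx}\right)\delta x$$

Thus, taking the square and the average:

( 15 )

$$\overline{\delta f^2} = \left(\frac{df}{dx}\right)^2 \overline{\delta x^2}$$

and using the definition of σ, we get:

( 16 )

$$\sigma_f = \left|\frac{df}{dx}\right| \sigma_x$$

Examples:

(a) $f = \sqrt{x}$

( 17 )

$$\frac{df}{dx} = \frac{1}{2\sqrt{x}}$$

( 18 )

$$\sigma_f = \frac{\sigma_x}{2\sqrt{x}}, \quad \text{or} \quad \frac{\sigma_f}{f} = \frac{1}{2}\frac{\sigma_x}{x}$$

(b) $f = x^2$

( 19 )

$$\frac{df}{dx} = 2x$$

( 20 )

$$\sigma_f = 2|x|\,\sigma_x, \quad \text{or} \quad \frac{\sigma_f}{f} = 2\,\frac{\sigma_x}{|x|}$$

(c) $f = \cos\theta$

( 21 )

$$\frac{df}{d\theta} = -\sin\theta$$

( 22 )

$$\sigma_f = |\sin\theta|\,\sigma_\theta, \quad \text{or} \quad \frac{\sigma_f}{f} = |\tan\theta|\,\sigma_\theta$$

Note: in this situation, $\sigma_\theta$ must be in radians.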
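One way to sanity-check the single-variable rule $\sigma_f = |df/dx|\,\sigma_x$ is to estimate the derivative numerically and compare it with the closed forms for the three examples. The sketch below does this with a central difference; the helper `propagate`, the step size `h`, and the values `x = 4.0` and `sigma_x = 0.1` are illustrative assumptions, not values from the text.

```python
import math


def propagate(f, x, sigma_x, h=1e-6):
    """Approximate sigma_f = |df/dx| * sigma_x using a central difference."""
    dfdx = (f(x + h) - f(x - h)) / (2 * h)
    return abs(dfdx) * sigma_x


x, sigma_x = 4.0, 0.1   # illustrative values, not from the text

# (a) f = sqrt(x): closed form is sigma_x / (2 sqrt(x))
sqrt_numeric = propagate(math.sqrt, x, sigma_x)
sqrt_exact = sigma_x / (2 * math.sqrt(x))

# (b) f = x^2: closed form is 2 |x| sigma_x
square_numeric = propagate(lambda v: v ** 2, x, sigma_x)
square_exact = 2 * abs(x) * sigma_x

# (c) f = cos(theta): closed form is |sin(theta)| sigma_theta, angles in radians
theta, sigma_theta = math.radians(25), math.radians(1)
cos_numeric = propagate(math.cos, theta, sigma_theta)
cos_exact = abs(math.sin(theta)) * sigma_theta

print(sqrt_numeric, sqrt_exact)
print(square_numeric, square_exact)
print(cos_numeric, cos_exact)
```

Each pair of printed numbers should agree to several digits. The numerical route is handy when a function is awkward to differentiate by hand, but it assumes the uncertainty is small enough that f is close to linear over the interval.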

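In the case where f depends on two or more variables, the derivation above can be repeated with minor modification. For two variables, f(x, y), we have:

( 23 )

$$\delta f = \left(\frac{\partial f}{\partial x}\right)\delta x + \left(\frac{\partial f}{\partial y}\right)\delta y$$

The partial derivative $\partial f/\partial x$ means differentiating f with respect to x holding the other variables fixed. Taking the square and the average, we get the law of propagation of uncertainty:

( 24 )

$$\overline{\delta f^2} = \left(\frac{\partial f}{\partial x}\right)^2 \overline{\delta x^2} + \left(\frac{\partial f}{\partial y}\right)^2 \overline{\delta y^2} + 2\left(\frac{\partial f}{\partial x}\right)\left(\frac{\partial f}{\partial y}\right)\overline{\delta x\,\delta y}$$

If the measurements of x and y are uncorrelated, then $\overline{\delta x\,\delta y} = 0$, and we get:

( 25 )

$$\sigma_f = \sqrt{\left(\frac{\partial f}{\partial x}\right)^2 \sigma_x^2 + \left(\frac{\partial f}{\partial y}\right)^2 \sigma_y^2}$$

Examples:

(a) f = x + y

( 26 )

$$\frac{\partial f}{\partial x} = 1, \quad \frac{\partial f}{\partial y} = 1$$

( 27 )

$$\therefore\ \sigma_f = \sqrt{\sigma_x^2 + \sigma_y^2}$$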

When adding (or subtracting) independent measurements, the absolute uncertainty of the sum (or difference) is the root sum of squares (RSS) of the individual absolute uncertainties. When adding correlated measurements, the uncertainty in the result is simply the sum of the absolute uncertainties, which is always a larger uncertainty estimate than adding in quadrature (RSS). Adding or subtracting a constant does not change the absolute uncertainty of the calculated value as long as the constant is an exact value.

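(b) f = xy

( 28 )

$$\frac{\partial f}{\partial x} = y, \quad \frac{\partial f}{\partial y} = x$$

( 29 )

$$\therefore\ \sigma_f = \sqrt{y^2 \sigma_x^2 + x^2 \sigma_y^2}$$

Dividing the previous equation by f = xy, we get:

( 30 )

$$\frac{\sigma_f}{f} = \sqrt{\left(\frac{\sigma_x}{x}\right)^2 + \left(\frac{\sigma_y}{y}\right)^2}$$

(c) f = x / y

( 31 )

$$\frac{\partial f}{\partial x} = \frac{1}{y}, \quad \frac{\partial f}{\partial y} = -\frac{x}{y^2}$$

( 32 )

$$\therefore\ \sigma_f = \sqrt{\left(\frac{1}{y}\right)^2 \sigma_x^2 + \left(\frac{x}{y^2}\right)^2 \sigma_y^2}$$

Dividing the previous equation by f = x / y, we get:

( 33 )

$$\frac{\sigma_f}{f} = \sqrt{\left(\frac{\sigma_x}{x}\right)^2 + \left(\frac{\sigma_y}{y}\right)^2}$$

When multiplying (or dividing) independent measurements, the relative uncertainty of the product (quotient) is the RSS of the individual relative uncertainties. When multiplying correlated measurements, the uncertainty in the result is simply the sum of the relative uncertainties, which is always a larger uncertainty estimate than adding in quadrature (RSS). Multiplying or dividing by a constant does not change the relative uncertainty of the calculated value.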
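The sum, product, and quotient rules can all be checked against the general two-variable law for uncorrelated measurements. The sketch below estimates the partial derivatives numerically; `propagate_xy`, the step size `h`, and the sample values for x and y are illustrative assumptions rather than anything taken from the text.

```python
import math


def propagate_xy(f, x, sx, y, sy, h=1e-6):
    """sigma_f for uncorrelated x and y, using central-difference partial derivatives."""
    dfdx = (f(x + h, y) - f(x - h, y)) / (2 * h)
    dfdy = (f(x, y + h) - f(x, y - h)) / (2 * h)
    # Root sum of squares of the two contributions.
    return math.hypot(dfdx * sx, dfdy * sy)


x, sx = 3.0, 0.1   # illustrative values, not from the text
y, sy = 4.0, 0.2

sum_sigma = propagate_xy(lambda a, b: a + b, x, sx, y, sy)
prod_sigma = propagate_xy(lambda a, b: a * b, x, sx, y, sy)
quot_sigma = propagate_xy(lambda a, b: a / b, x, sx, y, sy)

abs_rss = math.hypot(sx, sy)           # RSS of the absolute uncertainties
rel_rss = math.hypot(sx / x, sy / y)   # RSS of the relative uncertainties

print(sum_sigma, abs_rss)              # sum: absolute uncertainties add in quadrature
print(prod_sigma / (x * y), rel_rss)   # product: relative uncertainties add in quadrature
print(quot_sigma / (x / y), rel_rss)   # quotient: same relative form as the product
```

Each printed pair should match closely, which illustrates that the product and quotient share the same relative uncertainty.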

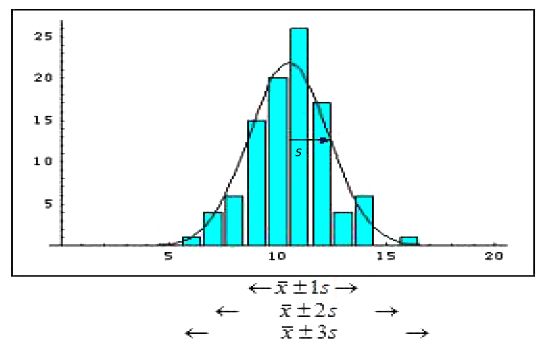

Note that the relative uncertainty in f, as shown in (b) and (c) above, has the same form for multiplication and division: the relative uncertainty in a product or quotient depends on the relative uncertainty of each individual term. Example: Find the uncertainty in v, where v = at.

( 34 )

$$\frac{\sigma_v}{v} = \sqrt{\left(\frac{\sigma_a}{a}\right)^2 + \left(\frac{\sigma_t}{t}\right)^2} = \sqrt{(0.010)^2 + (0.029)^2} = 0.031 \text{ or } 3.1\%$$

Notice that the relative uncertainty in t (2.9%) is significantly greater than the relative uncertainty for a (1.0%), and therefore the relative uncertainty in v is essentially the same as for t (about 3%). Graphically, the RSS is like the Pythagorean theorem:

Figure 2

The total uncertainty is the length of the hypotenuse of a right triangle with legs the length of each uncertainty component.

Timesaving approximation: "A chain is only as strong as its weakest link."

If one of the uncertainty terms is more than 3 times greater than the other terms, the root-squares formula can be skipped, and the combined uncertainty is simply the largest uncertainty. This shortcut can save a lot of time without any significant loss of accuracy in the estimate of the overall uncertainty.
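To see how little the shortcut costs, the sketch below recomputes the v = at example from its two relative uncertainties and then evaluates the borderline case where one term is exactly three times the other. Only the two relative uncertainties come from the text; the variable names are illustrative.

```python
import math

rel_a, rel_t = 0.010, 0.029          # relative uncertainties of a and t from the example

rel_v = math.hypot(rel_a, rel_t)     # root sum of squares
largest = max(rel_a, rel_t)
print(f"RSS     = {rel_v:.3f}")      # 0.031
print(f"largest = {largest:.3f}")    # 0.029

# Borderline case for the shortcut: one term exactly 3 times the other.
ratio = math.hypot(3.0, 1.0) / 3.0
print(f"RSS / largest at a 3:1 ratio = {ratio:.3f}")   # 1.054
```

At a 3:1 ratio the full RSS is only about 5% larger than the dominant term, which is well inside the precision to which uncertainties are normally quoted.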

The Upper-Lower Bound Method of Uncertainty Propagation

An alternative, and sometimes simpler, procedure to the tedious propagation of uncertainty law is the upper-lower bound method of uncertainty propagation. This alternative method does not yield a standard uncertainty estimate (with a 68% confidence interval), but it does give a reasonable estimate of the uncertainty for practically any situation. The basic idea of this method is to use the uncertainty ranges of each variable to calculate the maximum and minimum values of the function. You can also think of this procedure as examining the best and worst case scenarios. For example, suppose you measure an angle to be θ = 25° ± 1° and you need to find f = cos θ. Then:

( 35 )

$$f_{\max} = \cos(24^\circ) = 0.9135$$

( 36 )

$$f_{\min} = \cos(26^\circ) = 0.8988$$

( 37 )

$$\therefore\ f = 0.906 \pm 0.007$$

Because cosine decreases as the angle increases in this range, the maximum of f occurs at the smaller angle. Note that even though θ was only measured to 2 significant figures, f is known to 3 figures. By using the propagation of uncertainty law: $\sigma_f = |\sin\theta|\,\sigma_\theta = (0.423)(\pi/180) = 0.0074$, which agrees with the result above.

The uncertainty estimate from the upper-lower bound method is generally larger than the standard uncertainty estimate found from the propagation of uncertainty law, but both methods will give a reasonable estimate of the uncertainty in a calculated value.
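The upper-lower bound calculation for f = cos θ can be sketched as below. The helper `upper_lower_bound` is an illustrative name, and it evaluates the function only at the two ends of the interval, so it assumes f is monotonic between x − dx and x + dx.

```python
import math


def upper_lower_bound(f, x, dx):
    """Evaluate f at both ends of x ± dx and return (midpoint, half-range).

    Assumes f is monotonic on the interval, so the extremes occur at the ends.
    """
    a, b = f(x - dx), f(x + dx)
    lo, hi = min(a, b), max(a, b)
    return (hi + lo) / 2, (hi - lo) / 2


def cos_deg(angle_deg):
    """Cosine of an angle given in degrees."""
    return math.cos(math.radians(angle_deg))


value, unc = upper_lower_bound(cos_deg, 25.0, 1.0)
print(f"f = {value:.3f} ± {unc:.3f}")   # f = 0.906 ± 0.007
```

Taking `min` and `max` of the two endpoint values avoids having to decide in advance which end of the interval gives the larger result.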

The upper-lower bound method is especially useful when the functional relationship is not clear or is incomplete. One practical application is forecasting the expected range in an expense budget. In this case, some expenses may be fixed, while others may be uncertain, and the range of these uncertain terms could be used to predict the upper and lower bounds on the total expense.

Significant Figures

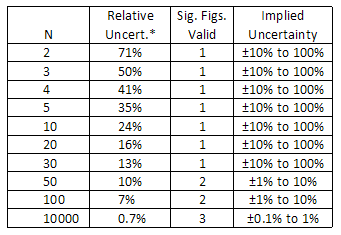

The number of significant figures in a value can be defined as all the digits between and including the first non-zero digit from the left, through the last digit. For instance, 0.44 has two significant figures, and the number 66.770 has 5 significant figures. Zeroes are significant except when used to locate the decimal point, as in the number 0.00030, which has 2 significant figures. Zeroes may or may not be significant for numbers like 1200, where it is not clear whether two, three, or four significant figures are indicated. To avoid this ambiguity, such numbers should be expressed in scientific notation (e.g. 1.20 × 10³ clearly indicates three significant figures).

When using a calculator, the display will often show many digits, only some of which are meaningful (significant in a different sense). For example, if you want to estimate the area of a circular playing field, you might pace off the radius to be 9 meters and use the formula: A = πr². When you compute this area, the calculator might report a value of 254.4690049 m². It would be extremely misleading to report this number as the area of the field, because it would suggest that you know the area to an absurd degree of precision, to within a fraction of a square millimeter! Since the radius is only known to one significant figure, the final answer should also contain only one significant figure: Area = 3 × 10² m².

From this example, we can see that the number of significant figures reported for a value implies a certain degree of precision. In fact, the number of significant figures suggests a rough estimate of the relative uncertainty:

1 significant figure suggests a relative uncertainty of about 10% to 100%

2 significant figures suggest a relative uncertainty of about 1% to 10%

3 significant figures suggest a relative uncertainty of about 0.1% to 1%

Use of Significant Figures for Simple Propagation of Uncertainty

By following a few simple rules, significant figures can be used to find the appropriate precision for a calculated result for the four most basic math functions, all without the use of complicated formulas for propagating uncertainties.

For multiplication and division, the number of significant figures that are reliably known in a product or quotient is the same as the smallest number of significant figures in any of the original numbers.

Example:

6.6 (2 significant figures) × 7328.7 (5 significant figures) = 48369.42 = 48 × 10³ (2 significant figures)

For addition and subtraction, the result should be rounded off to the last decimal place reported for the least precise number.

Examples:

223.64 + 54 = 278

5560.5 + 0.008 = 5560.5
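These rounding rules can be tried out with a small helper. The sketch below rounds to a chosen number of significant figures and reproduces the examples above; `round_sig` is an illustrative name, and the decision about how many figures or decimal places to keep is still made by hand, following the rules in the text.

```python
import math


def round_sig(value, sig):
    """Round value to `sig` significant figures."""
    if value == 0:
        return 0.0
    exponent = math.floor(math.log10(abs(value)))   # position of the leading digit
    return round(value, sig - 1 - exponent)


# Multiplication: keep the 2 significant figures of the least precise factor (6.6).
print(round_sig(6.6 * 7328.7, 2))        # 48000.0, i.e. 48 × 10³

# Area of the playing field: the radius has 1 significant figure.
print(round_sig(math.pi * 9 ** 2, 1))    # 300.0, i.e. 3 × 10² m²

# Addition: round to the last decimal place of the least precise term.
print(round(223.64 + 54))                # 278
print(round(5560.5 + 0.008, 1))          # 5560.5
```

Note that a float such as 48000.0 no longer shows how many figures are significant, which is why the text recommends scientific notation for reporting.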

Uncertainty, Significant Figures, and Rounding

For the same reason that it is dishonest to report a result with more significant figures than are reliably known, the uncertainty value should also not be reported with excessive precision. For example, it would be unreasonable for a student to report a result like:

( 38 )

measured density = 8.93 ± 0.475328 g/cm³ WRONG!

The uncertainty in the measurement cannot possibly be known so precisely! In most experimental work, the confidence in the uncertainty estimate is not much better than about ±50% because of all the various sources of error, none of which can be known exactly. Therefore, uncertainty values should be stated to only one significant figure (or perhaps 2 sig. figs. if the first digit is a 1).

Correct: measured density = 8.9 ± 0.5 g/cm³.

The relative uncertainty of a standard deviation estimated from N measurements is given by the approximate formula:

$$\frac{\sigma_\sigma}{\sigma} = \frac{1}{\sqrt{2(N - 1)}}$$

An experimental value should be rounded to be consistent with the magnitude of its uncertainty. This generally means that the last significant figure in any reported value should be in the same decimal place as the uncertainty.

In most instances, this practice of rounding an experimental result to be consistent with the uncertainty estimate gives the same number of significant figures as the rules discussed earlier for simple propagation of uncertainties for adding, subtracting, multiplying, and dividing.
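The rounding convention described above can be sketched as a helper that first rounds the uncertainty and then rounds the value to the same decimal place. The function name is illustrative, and the sketch does not handle edge cases such as an uncertainty that rounds up into the next power of ten (for example 0.096).

```python
import math


def round_to_uncertainty(value, uncertainty):
    """Round the uncertainty to 1 significant figure (2 if its leading digit is 1),
    then round the value to the same decimal place."""
    exponent = math.floor(math.log10(abs(uncertainty)))
    leading_digit = int(abs(uncertainty) / 10 ** exponent)
    sig = 2 if leading_digit == 1 else 1
    decimals = sig - 1 - exponent
    return round(value, decimals), round(uncertainty, decimals)


# The density example: 8.93 ± 0.475328 g/cm³ should be reported as 8.9 ± 0.5 g/cm³.
print(round_to_uncertainty(8.93, 0.475328))    # (8.9, 0.5)

# The paper-width example: 31.19 cm with a standard error of about 0.0537 cm.
print(round_to_uncertainty(31.19, 0.0537))     # (31.19, 0.05)
```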

Caution: When conducting an experiment, it is important to keep in mind that precision is expensive (both in terms of time and material resources). Do not waste your time trying to obtain a precise result when only a rough estimate is required. The cost increases exponentially with the amount of precision required, so the potential benefit of this precision must be weighed against the extra cost.

Combining and Reporting Uncertainties

In 1993, the International Standards Organization (ISO) published the first official worldwide Guide to the Expression of Uncertainty in Measurement. Before this time, uncertainty estimates were evaluated and reported according to different conventions depending on the context of the measurement or the scientific discipline. Here are a few key points from this 100-page guide, which can be found in modified form on the NIST website. When reporting a measurement, the measured value should be reported along with an estimate of the total combined standard uncertainty $U_c$.

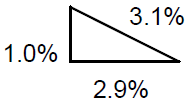

Conclusion: "When do measurements concord with each other?"

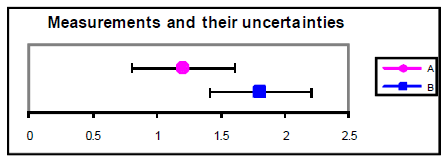

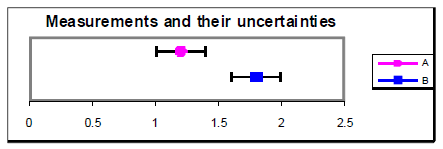

We now have the resources to answer the fundamental scientific question that was asked at the beginning of this error analysis discussion: "Does my result agree with a theoretical prediction or results from other experiments?" Generally speaking, a measured result agrees with a theoretical prediction if the prediction lies within the range of experimental uncertainty. Similarly, if two measured values have standard uncertainty ranges that overlap, then the measurements are said to be consistent (they agree). If the uncertainty ranges do not overlap, then the measurements are said to be discrepant (they do not agree). However, you should recognize that these overlap criteria can give two opposite answers depending on the evaluation and confidence level of the uncertainty. It would be unethical to arbitrarily inflate the uncertainty range just to make a measurement agree with an expected value. A better procedure would be to discuss the size of the difference between the measured and expected values within the context of the uncertainty, and try to discover the source of the discrepancy if the difference is truly significant. To examine your own data, you are encouraged to use the Measurement Comparison tool available on the lab website. Here are some examples using this graphical analysis tool:

Figure 3

A = 1.2 ± 0.4, B = 1.8 ± 0.4

Figure 4

An alternative method for determining agreement between values is to calculate the difference between the values divided by their combined standard uncertainty. This ratio gives the number of standard deviations separating the two values. If this ratio is less than 1.0, then it is reasonable to conclude that the values agree. If the ratio is more than 2.0, then it is highly unlikely (less than about 5% probability) that the values are the same. Example from above with u = 0.4: |1.2 − 1.8| / 0.57 = 1.1. With u = 0.2: |1.2 − 1.8| / 0.28 = 2.1.
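The agreement ratio can be computed as shown below. This sketch assumes the two measurements are independent, so their combined standard uncertainty is taken as the root sum of squares of the individual uncertainties; that choice reproduces the ratios of 1.1 and 2.1 quoted above. The function name is illustrative.

```python
import math


def agreement_ratio(a, u_a, b, u_b):
    """Number of combined standard uncertainties separating a and b.

    Assumes independent measurements, so the uncertainties combine in quadrature.
    """
    return abs(a - b) / math.hypot(u_a, u_b)


print(f"{agreement_ratio(1.2, 0.4, 1.8, 0.4):.1f}")   # 1.1
print(f"{agreement_ratio(1.2, 0.2, 1.8, 0.2):.1f}")   # 2.1
```

With u = 0.4 the ratio sits just above 1.0, so the values are not clearly discrepant; with u = 0.2 it exceeds 2.0, so agreement is unlikely.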

References

Baird, D.C. Experimentation: An Introduction to Measurement Theory and Experiment Design, 3rd ed. Prentice Hall: Englewood Cliffs, 1995.

Bevington, Phillip and Robinson, D. Data Reduction and Error Analysis for the Physical Sciences, 2nd ed. McGraw-Hill: New York, 1991.

ISO. Guide to the Expression of Uncertainty in Measurement. International Organization for Standardization (ISO) and the International Committee on Weights and Measures (CIPM): Switzerland, 1993.

Lichten, William. Data and Error Analysis, 2nd ed. Prentice Hall: Upper Saddle River, NJ, 1999.

NIST. Essentials of Expressing Measurement Uncertainty. http://physics.nist.gov/cuu/Uncertainty/

Taylor, John. An Introduction to Error Analysis, 2nd ed. University Science Books: Sausalito, 1997.

Source: https://www.webassign.net/question_assets/unccolphysmechl1/measurements/manual.html

Posted by: myersseencephe.blogspot.com
